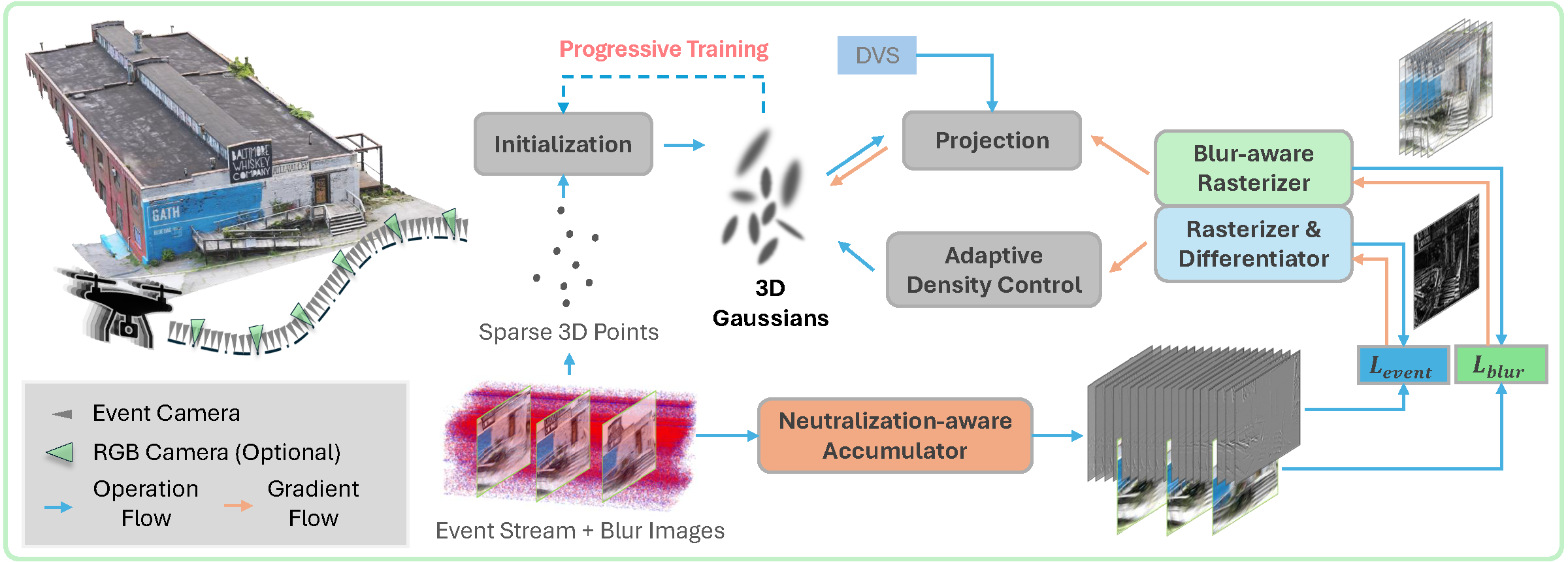

Event3DGS: Event-Based 3D Gaussian Splatting for High-Speed Robot Egomotion

CoRL 2024

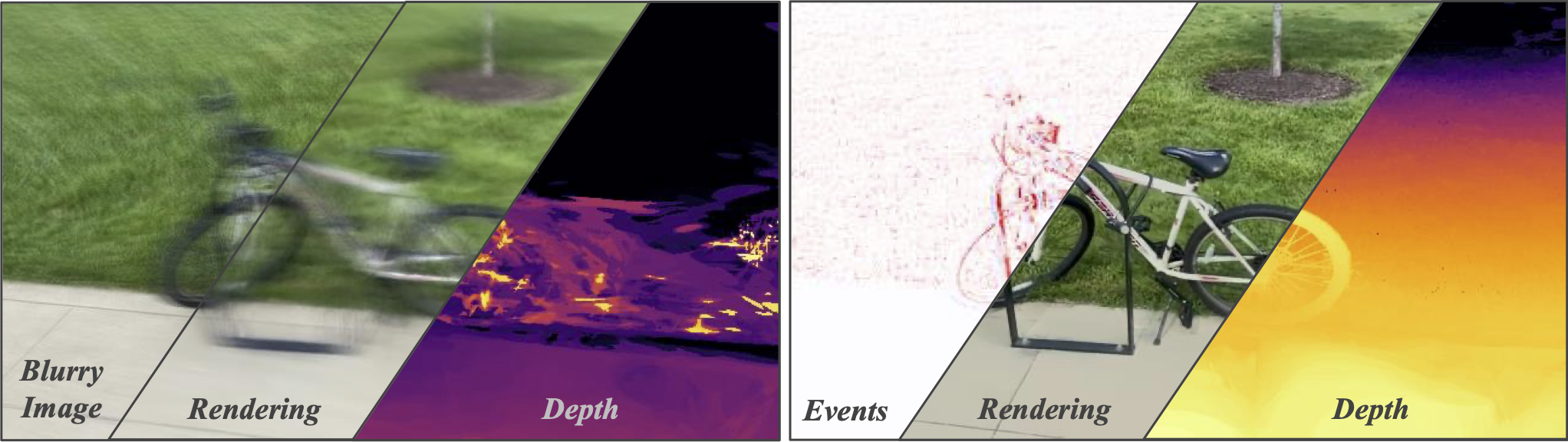

Left: Conventional (frame-based) 3D Gaussian Splatting fails to reconstruct geometric details due to motion blur caused by high-speed robot egomotion. Right: By exploiting the high temporal resolution of event cameras, Event3DGS can effectively reconstruct structure and appearance in the presence of fast egomotion.